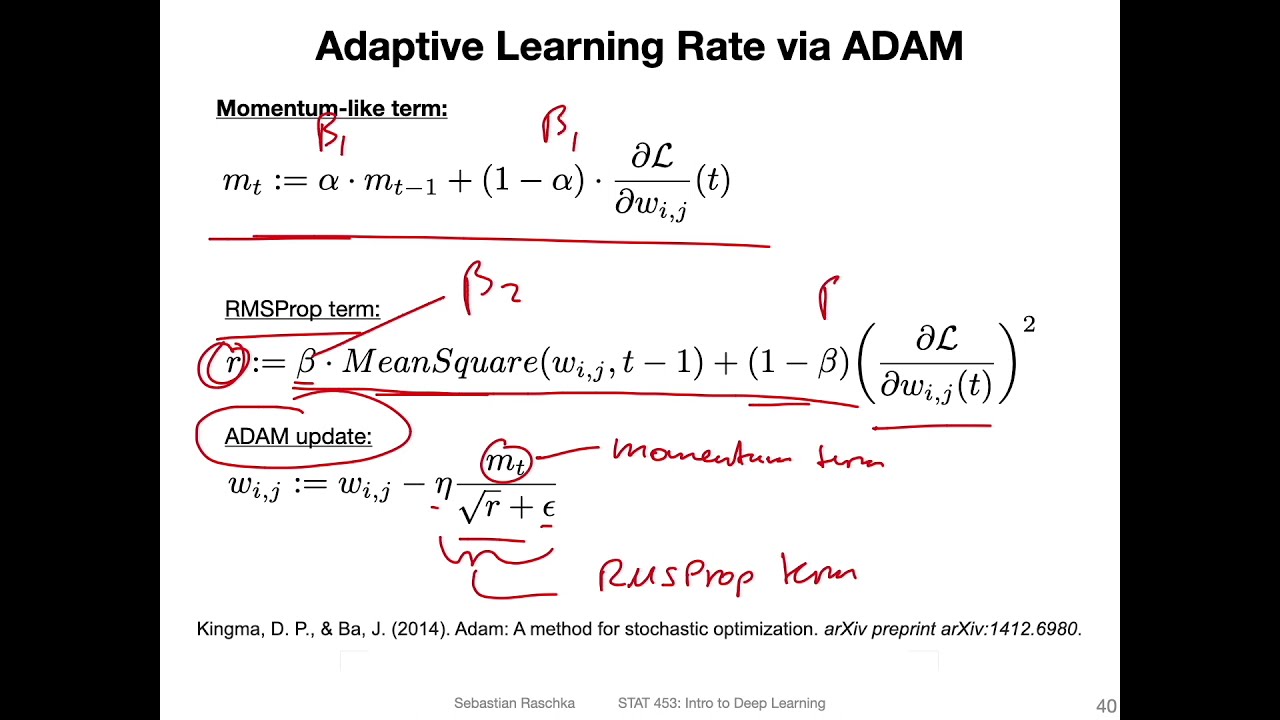

Gentle Introduction to the Adam Optimization Algorithm for Deep Learning - MachineLearningMastery.com

Learning Rate Grafting: Transferability of Optimizer Tuning (Machine Learning Research Paper Review) - YouTube

Why do we call ADAM an adaptive learning rate algorithm if the step size is a constant? - Cross Validated

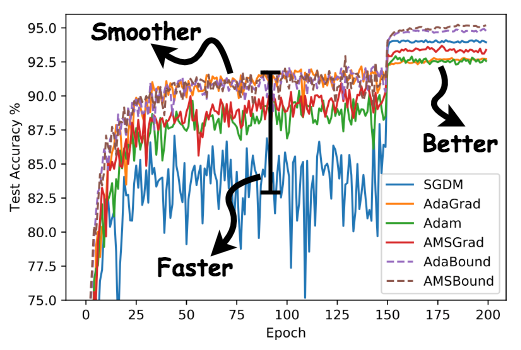

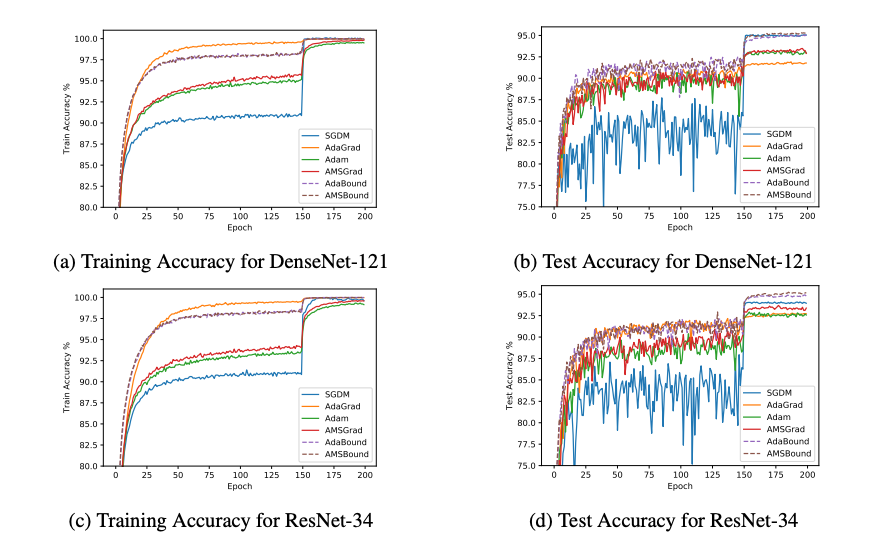

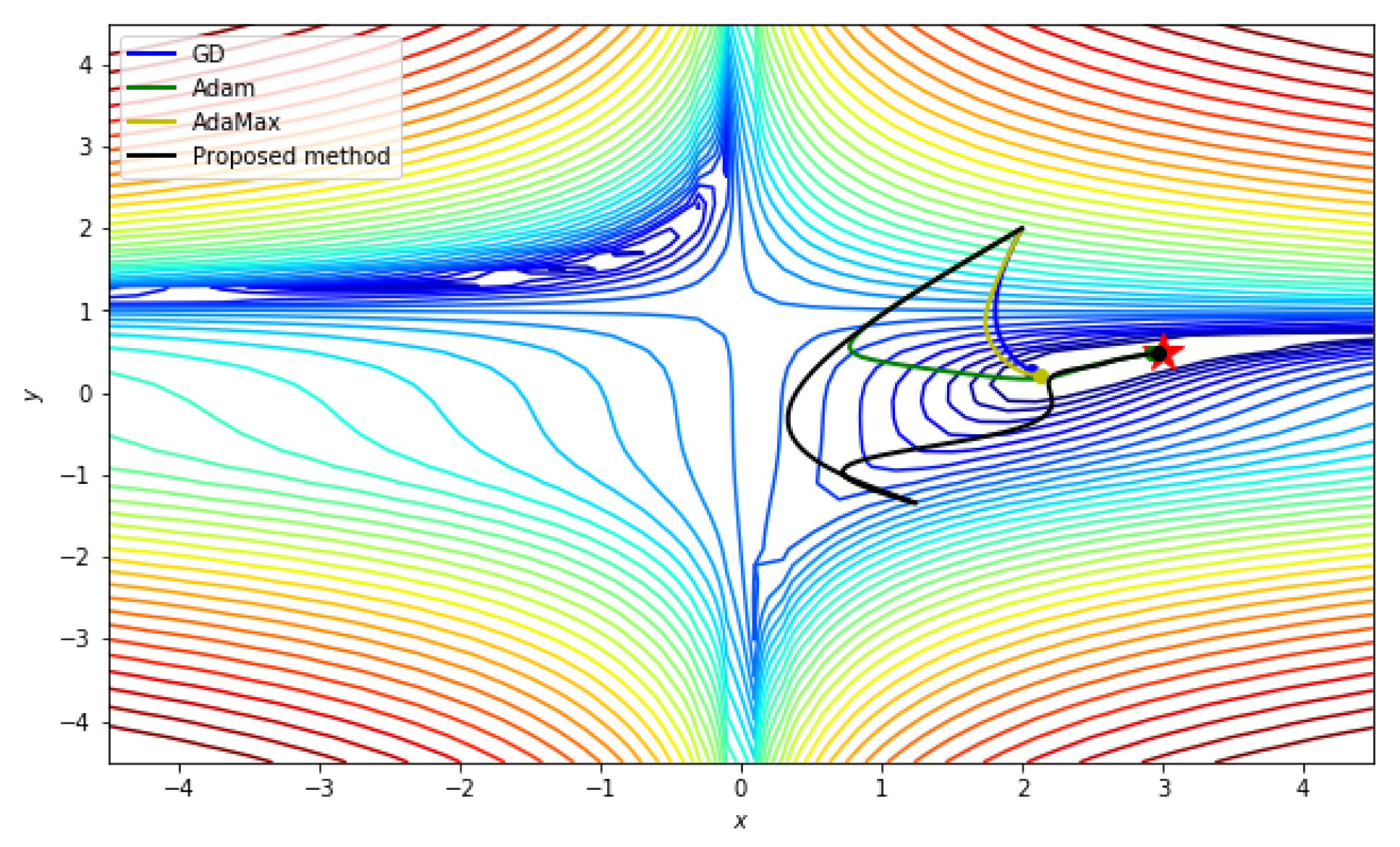

ICLR 2019 | 'Fast as Adam & Good as SGD' — New Optimizer Has Both | by Synced | SyncedReview | Medium

Tests of Eurosat dataset using Adam optimizer with 0.0005 learning rate... | Download Scientific Diagram

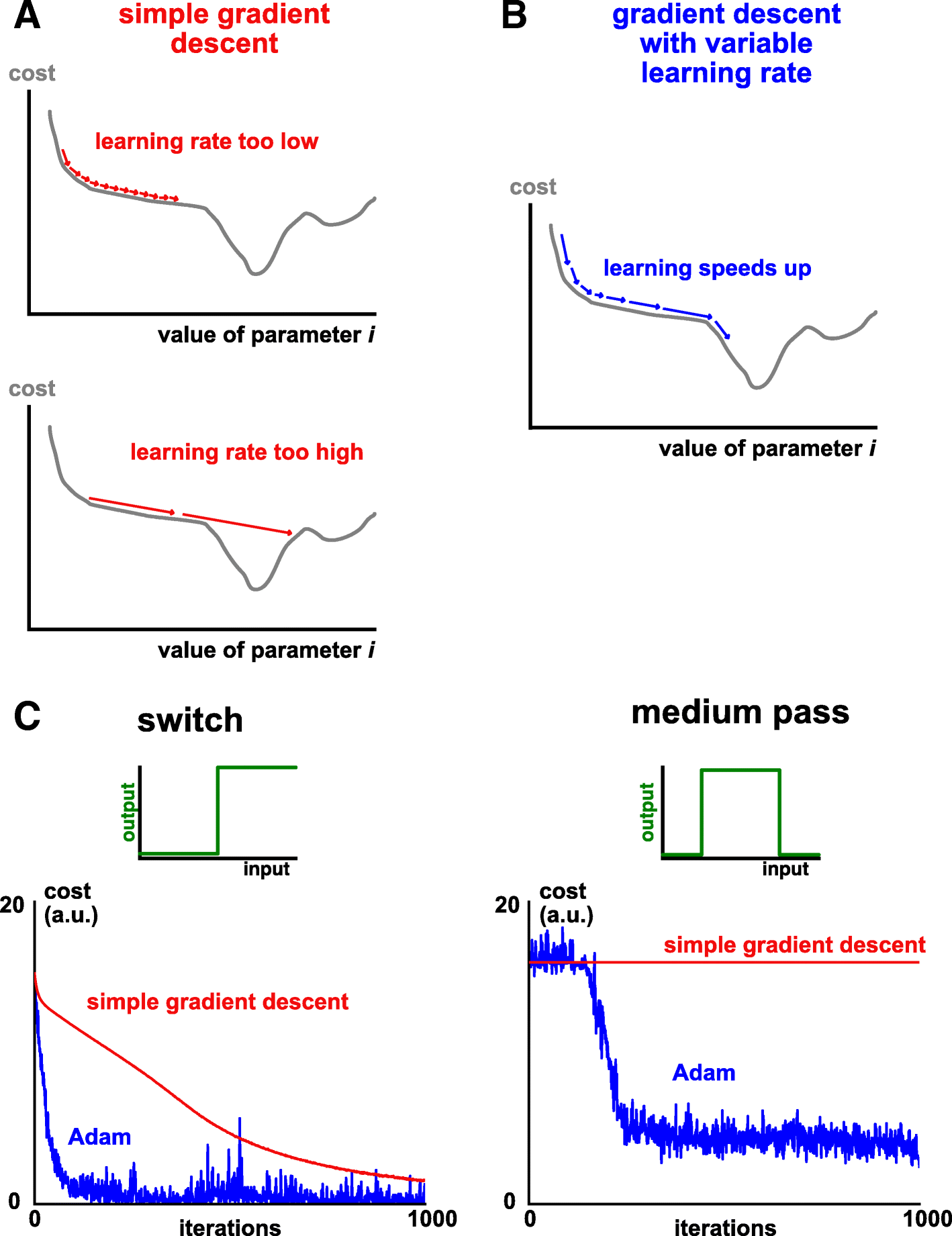

Applied Sciences | Free Full-Text | An Effective Optimization Method for Machine Learning Based on ADAM

Different learning rates of the Adam optimizer in TensorFlow for the... | Download Scientific Diagram

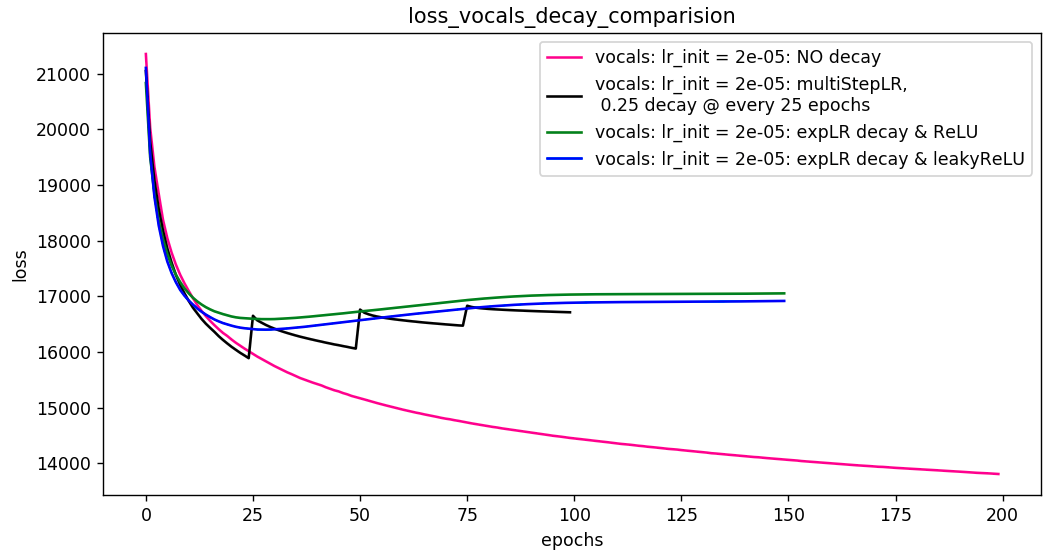

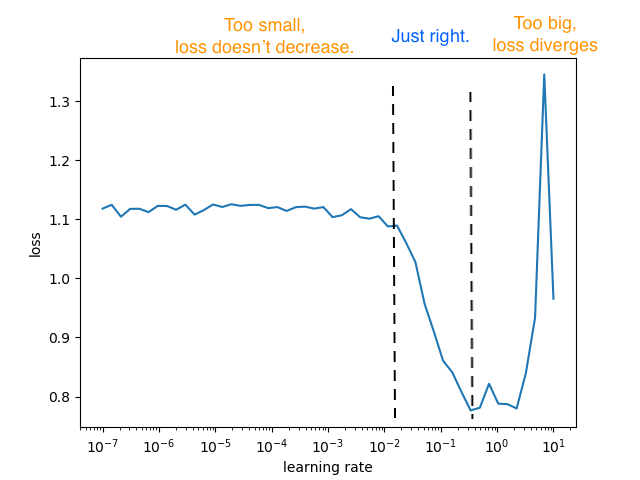

04B_07. Learning Rate and Learning Rate scheduling - EN - Deep Learning Bible - 2. Classification - English